Apple’s CSAM Scanning Plan: Privacy Protector or Pandora’s Box?

In August 2021, Apple announced a new initiative aimed at combating the spread of Child Sexual Abuse Material (CSAM) by checking photos as they were uploaded to iCloud Photos. The proposed system would run a matching step on the device itself, comparing a hash of each image against a database of known CSAM hashes provided by organizations like the National Center for Missing & Exploited Children (NCMEC). Apple emphasized that the system was designed with user privacy in mind: only images matching known CSAM would be flagged, and an account would be surfaced for human review only after it crossed a threshold number of matches.
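The matching idea described above can be illustrated with a deliberately simplified sketch. It is not Apple's implementation: the proposed design relied on a perceptual hash and cryptographic protocols so that neither the device nor Apple learned about individual matches below the threshold, whereas this example uses a plain set lookup. The class name `MatchCounter`, the placeholder hash strings, and the threshold of 2 are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class MatchCounter:
    """Toy model of threshold-based matching against a set of known hashes.

    Assumption: hashes are opaque strings computed elsewhere. A real system
    would use a perceptual hash and would not expose matches in the clear.
    """

    known_hashes: frozenset
    threshold: int
    matches: list = field(default_factory=list)

    def check(self, image_hash: str) -> bool:
        """Record the hash if it is in the known set; return whether it matched."""
        if image_hash in self.known_hashes:
            self.matches.append(image_hash)
            return True
        return False

    def reached_threshold(self) -> bool:
        """True once the number of recorded matches meets the threshold."""
        return len(self.matches) >= self.threshold


if __name__ == "__main__":
    # Placeholder values only; these are not real image hashes.
    counter = MatchCounter(known_hashes=frozenset({"a1", "b2", "c3"}), threshold=2)
    for h in ["zz", "a1", "yy", "b2"]:
        matched = counter.check(h)
        print(h, "matched" if matched else "no match",
              "| review triggered:", counter.reached_threshold())
```

The sketch also makes the critics' worry concrete: nothing in the matching logic knows what the hashes represent, so what gets flagged depends entirely on which list is supplied as `known_hashes`.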

However, the announcement met immediate backlash from privacy advocates, cryptographers, and civil liberties organizations. Critics argued that building such a scanning mechanism, even with good intentions, would set a dangerous precedent. Their concern was that once a system capable of checking user content against a hash list was in place, the list could be expanded or the system repurposed to monitor other types of content, opening the door to broader surveillance.

“Apple will use its CSAM detection technology... scans, flags, and reports photos on iCloud storage for containing known child sexual abuse material.”

— Reader's Digest

In September 2021, in response to the widespread criticism, Apple delayed the rollout of the CSAM scanning feature. The company said it would take additional time to collect input and make improvements before releasing it. Despite these assurances, concerns persisted about the potential for abuse and the implications for user privacy.

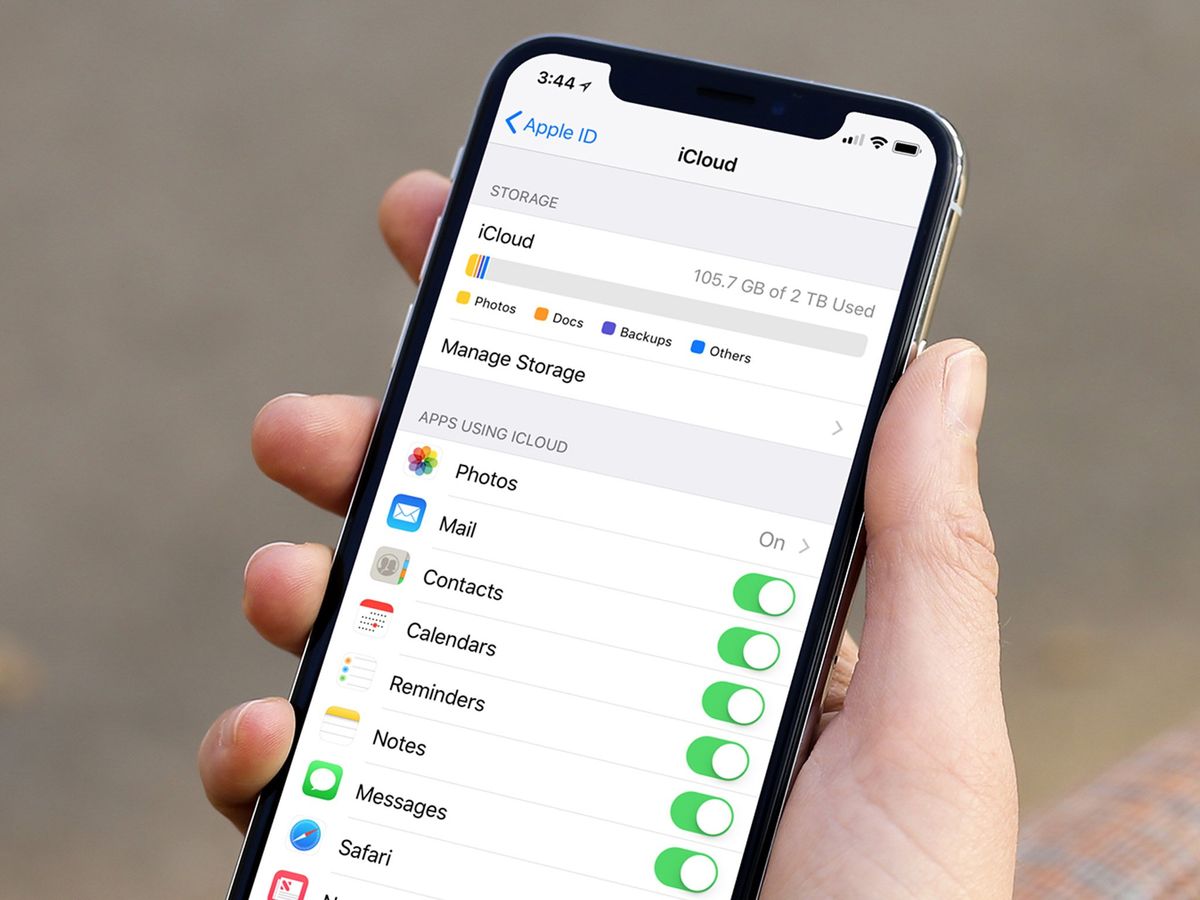

In December 2021, Apple quietly removed references to the CSAM scanning feature from its Child Safety web page, and in December 2022 it confirmed that it had abandoned the plan, signaling a shift in strategy. Instead, the company focused on enhancing its "Communication Safety" features, which aim to protect children by detecting sensitive content in messages on the device, without compromising overall user privacy. Apple also announced plans to expand end-to-end encryption across more of its iCloud services, reinforcing its commitment to user data protection.

While Apple's initial goal was to protect children from exploitation, the controversy surrounding the CSAM scanning proposal highlights the complex balance between safeguarding users and preserving privacy. The episode serves as a reminder of the challenges tech companies face when implementing features that, while well-intentioned, may have unintended consequences.